Artificial General Intelligence: Ukraine's War Shows The Real Risks

The war in Ukraine, a brutal conflict marked by unprecedented technological deployment, has inadvertently highlighted the very real risks associated with the development of Artificial General Intelligence (AGI). AGI itself remains theoretical: a hypothetical AI with human-level intelligence and adaptability. But the conflict shows how existing AI systems can be weaponized and misused, offering a chilling glimpse of a future dominated by advanced, autonomous weaponry.

This isn't about killer robots in the Terminator sense, at least not yet. The current risks are more subtle, yet arguably more insidious. The Ukrainian conflict demonstrates the dangers of:

1. Autonomous Weapons Systems and the Erosion of Human Control

Drones, both commercially available and military-grade, have played a significant role in the war. Most are still operated by humans, but the trend is toward increasing autonomy. Fully autonomous weapons systems raise serious concerns about malfunction, hacking, and unintended escalation: a system designed for one purpose could, through unforeseen circumstances or a simple software glitch, cause catastrophic damage. The potential loss of meaningful human oversight is arguably the most significant risk the war has highlighted. Further research into the ethical implications of Lethal Autonomous Weapons Systems (LAWS) is urgently needed, and organizations such as the Campaign to Stop Killer Robots are pressing for international regulations to prevent the development and deployment of such systems.

2. The Weaponization of Information and AI-Powered Disinformation Campaigns

The war has seen an unprecedented level of information warfare. AI-powered tools have been used to generate deepfakes, spread propaganda, and manipulate public opinion. This points to how AGI could be used to produce highly convincing disinformation campaigns capable of destabilizing governments and inciting violence on a global scale. The ability to manipulate information at scale poses a serious threat to democratic processes and international security, and fact-checking initiatives and media literacy education are crucial to mitigating this risk.

3. Accelerated AI Development Driven by Military Needs

The urgency of the conflict has accelerated the development and deployment of AI technologies. While this can yield innovations with civilian benefits, it also risks sidelining the ethical and safety considerations that should guide AGI research. The pressure to gain a military advantage may overshadow the long-term consequences of building increasingly powerful and autonomous systems. This largely unchecked development path calls for a global conversation about responsible AI development, so that ethical frameworks are in place before AGI becomes a reality.

4. The Increased Risk of Escalation and Unintended Consequences

The use of AI in warfare introduces complexities that can increase the risk of miscalculation and escalation. The speed and autonomy of AI-powered systems could lead to rapid escalation of conflict, potentially beyond human control. The opacity of some AI algorithms makes it difficult to predict their behavior, further increasing the likelihood of unforeseen and potentially disastrous consequences.

The war in Ukraine serves as a stark reminder that the development of AGI is not a purely theoretical concern. The risks are real, present, and demand urgent attention. International cooperation, ethical guidelines, and robust regulations are vital to ensure that the development and application of AI are guided by human values and prioritize safety and security. The future of AGI hinges on our ability to learn from the lessons of today's conflicts and chart a responsible path forward. Ignoring these risks could lead to unimaginable consequences. What steps do you think are necessary to mitigate these risks? Share your thoughts in the comments below.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Artificial General Intelligence: Ukraine's War Shows The Real Risks. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Murdock Uno Di Noi I Volontari Chiedono Giustizia

Jun 08, 2025

Murdock Uno Di Noi I Volontari Chiedono Giustizia

Jun 08, 2025 -

Lucass Arizona Game 2 Highlights Unc Athletics Perspective

Jun 08, 2025

Lucass Arizona Game 2 Highlights Unc Athletics Perspective

Jun 08, 2025 -

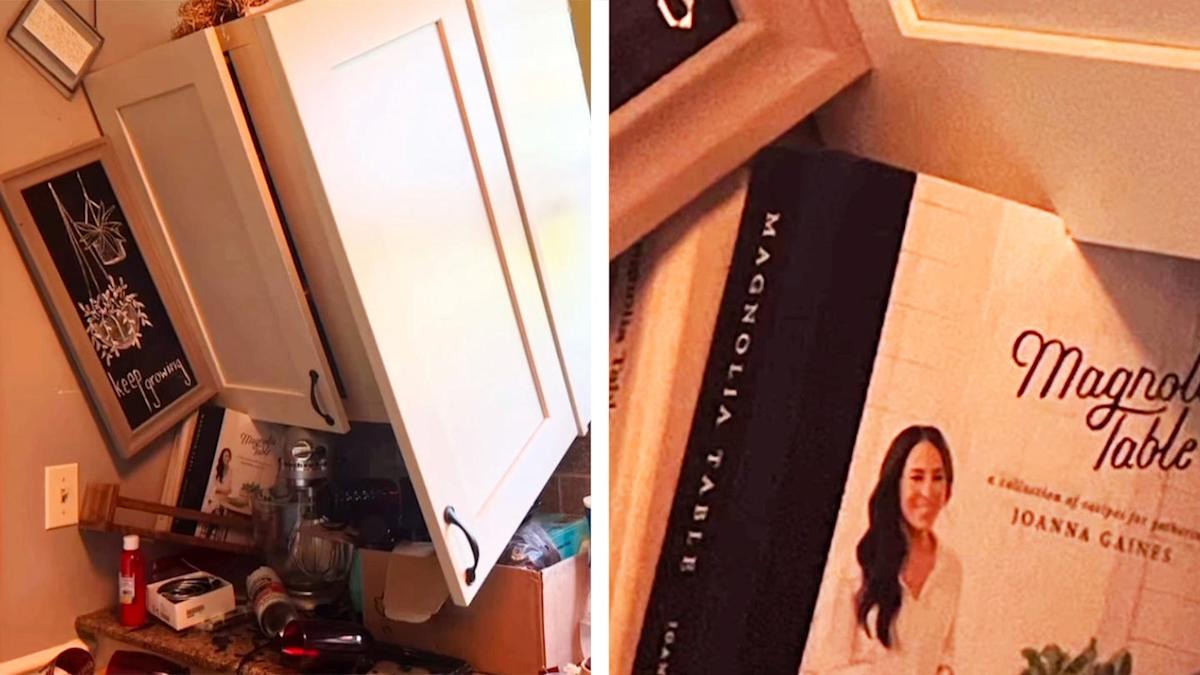

Joanna Gaines Cookbook Prevents Heirloom Dish Loss After Cabinet Collapse

Jun 08, 2025

Joanna Gaines Cookbook Prevents Heirloom Dish Loss After Cabinet Collapse

Jun 08, 2025 -

The Agassi Graf Legacy Their Childrens Journey Into Sports And Beyond

Jun 08, 2025

The Agassi Graf Legacy Their Childrens Journey Into Sports And Beyond

Jun 08, 2025 -

Canadian Open Leading Golfers Private Struggle Impacts Tournament

Jun 08, 2025

Canadian Open Leading Golfers Private Struggle Impacts Tournament

Jun 08, 2025